Marketers are spending $16.4 billion on influencer marketing each year, up by 18.8% in 2022, but brands still struggle to measure the impact of all those dollars spent.

Measuring the ROI of influencers was something I spent a lot of time thinking about as I built the marketing science team at Harry’s – a DTC men’s shaving brand – so I know how hard it can be. We quickly found you can’t rely on promo codes alone: too many customers forget to use them, and less reputable affiliates are tempted to share codes through coupon exchange portals to earn more clicks.

Instead, promo codes need to be part of a wider, more holistic approach to measurement that integrates digital tracking and Marketing Mix Modeling (MMM), so the two work together to eliminate wasted media spend on campaigns that aren’t driving incremental sales.

We’ll start by covering the measurement issues you might be finding with your influencer marketing campaigns. Next we’ll give a brief introduction to Marketing Mix Modeling, what its strengths and limitations are, and why modern DTC brands have adopted it.

Finally, we’ll walk through the exercise of actually building a simple marketing mix model in Excel / GSheets. You’ll see the kind of data you need, how the technique works, and what kind of decisions you can make with the output.

Measuring the impact of influencers

Influencer marketing is one of the less measurable digital channels, because it’s “off-book” – rather than paying a platform like Meta (Facebook & Instagram) for advertising, you’re typically contacting individual influencers and working with them directly.

Results often vary, as a great deal of campaign success comes down to 1) how relevant your product is to an influencer’s audience, 2) whether the influencer is passionate about your product, and 3) the creative execution of your campaign. There’s also the problem of fraud. In 2021, for example, 49% of Instagram influencer accounts were impacted by fraud, so marketers have to check for fake followers. The FTC and social media platforms are also cracking down on proper disclosure of advertorial posts by influencers.

One of the primary measurement methods most brands use is the tried-and-true promo code. This technique dates back to the 1920s and the Great Depression that followed, when coupon usage was at its height. Early scientific marketers like Daniel Starch and Claude Hopkins pioneered the practice of assigning unique promo codes to different ad copy variations in order to test their effectiveness.

This simple but effective technique unfortunately has significant flaws when used in today’s digital economy.

- Browser extensions like Honey or Rakuten auto-fill promo code form fields with whatever offers the greatest discount

- Savvy shoppers have been trained to Google for "[brand] promo codes" on reaching checkout, to see if they can get any money off their order

- Affiliate fraudsters practice “cookie stuffing” to tag as many people as possible with their affiliate code, taking a rake on whatever they purchase

In none of these scenarios are the sales recorded “incremental” – they still would have happened if you didn’t have a promo code form field – so any money paid out to affiliates or discounted from the price of the product was wasted.

On the other side of the ledger, many customers simply forget to use their promo codes! Or perhaps they didn’t notice the code buried in the text of the post. Either way, any marketer with experience running influencer campaigns will tell you they see spikes in sales when influencer campaigns go live, over and above what they can explain with tracking.

Marketing mix modeling explained

Marketing Mix Modeling (MMM) was introduced in the 1960s to match spikes and dips in sales to actions taken in marketing (and external factors). When done well, it can tell you the incremental impact of each part of your marketing strategy, so you can make data-driven decisions on where to allocate your marketing budget.

Historically, it was primarily used by large advertisers, the kind spending millions on Super Bowl ads, but it has since been adopted by modern DTC brands in the wake of Apple’s iOS 14 privacy changes, GDPR/CCPA, and ad blockers limiting marketers' ability to track users. MMM works without needing any user-level data, so it’s well suited to hard-to-track channels like TV, podcasts, and influencers.

Once you’ve built a model you’ll be able to determine how much to spend on each marketing channel, re-allocating budget from poorly performing channels to those with more room to scale. It serves as a second opinion to your digital tracking and analytics, often being the tiebreaker between what Google Analytics, your promo code tracking, and the “how did you hear about us” survey say about which channels deserve credit for sales.

Marketing Mix Models can be difficult to get right, and sometimes require advanced statistical knowledge, for example familiarity with Bayesian methods. They offer more of a top-down, channel-by-channel view of overall impact, rather than letting you drill down into individual campaigns, creative/message, or tactics. That said, if you get it right, MMMs are a valuable part of the modern attribution stack.

Marketing mix modeling tutorial

To follow along, check out the template from this post, based on data for a fictional ecommerce company, taken from the marketing mix modeling courses at Vexpower:

For a large enterprise brand, a Marketing Mix Model can be a complex project, taking multiple people up to 3 months to complete, and ongoing work to maintain. If you’re in that situation it makes sense to use an existing library like Meta Robyn, or try a fully automated marketing mix modeling tool like what we offer at Recast.

However, as an SMB or start-up you can get to a “good enough” model in an afternoon using nothing but Excel or Google Sheets.

Step 1: gather data

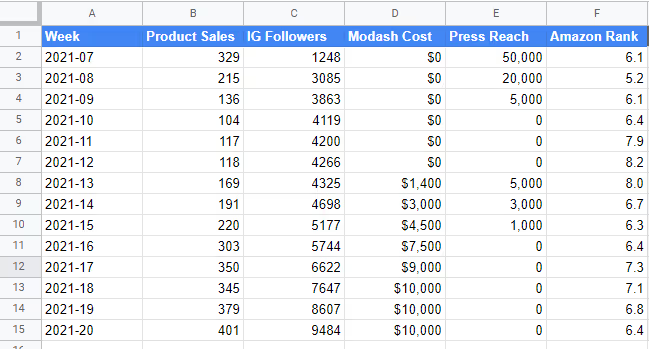

You need data from across your marketing mix, anything you pay money for that drives sales, so that the model can separate the impact of influencers from everything else. It needs to be in tabular format as shown, which means one row for each day or week, and one column for each channel or campaign.
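
As a rough illustration of that layout, here is a minimal Python sketch that builds a small weekly table and prints it as CSV, ready to paste into a spreadsheet. The channel names and every number are hypothetical placeholders, not the values from the template.

```python
import csv
import io

# Hypothetical weekly data: one row per week, one column per channel (spend in $),
# plus the sales figure the model will try to explain.
rows = [
    {"week": "2023-01-02", "influencers": 0, "meta": 10000, "google": 4000, "sales": 640},
    {"week": "2023-01-09", "influencers": 5000, "meta": 12000, "google": 4500, "sales": 850},
    {"week": "2023-01-16", "influencers": 0, "meta": 9000, "google": 4200, "sales": 590},
]

# Write the rows out as CSV text with a header line.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
writer.writeheader()
writer.writerows(rows)

table = buffer.getvalue()
print(table)
```

Weeks with no influencer activity still get a row, with zero in that column, so the model can see the contrast between active and inactive periods.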

Note: When you’re working with channels like YouTube, you’re unlikely to get all the results on the day or week the content is posted. If you’re expecting long-term ongoing traffic, this simple model will not capture those long-term benefits.

—

Pro Tip for Modeling Influencers:

Match spend to your posting schedule

The trick is to match up what you spent on each post with when that post went live. Otherwise the model will be looking in the wrong place for impact. Allocating spend to the day the deal was closed is a common misstep, as is grouping spend to the calendar week or month.

—
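
The pro tip above can be sketched in a few lines of Python. The deal records and field names (`fee`, `deal_closed`, `post_live`) are hypothetical; the only point is that spend is keyed by the date the post went live, not the date the deal was signed.

```python
from collections import defaultdict
from datetime import date

# Hypothetical influencer deals: the fee paid, when the deal closed, and when the post went live.
deals = [
    {"influencer": "A", "fee": 2000, "deal_closed": date(2023, 1, 3), "post_live": date(2023, 1, 17)},
    {"influencer": "B", "fee": 1500, "deal_closed": date(2023, 1, 5), "post_live": date(2023, 1, 17)},
    {"influencer": "C", "fee": 3000, "deal_closed": date(2023, 1, 10), "post_live": date(2023, 2, 1)},
]

# Allocate each fee to the day its post went live, which is where the model should look for impact.
spend_by_day = defaultdict(float)
for deal in deals:
    spend_by_day[deal["post_live"]] += deal["fee"]

for day in sorted(spend_by_day):
    print(day.isoformat(), spend_by_day[day])
```

The resulting daily figures become the influencer column in your data table, with zero on days when nothing went live.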

Ideally, with every media channel you want to measure the effect of spend: how many dollars do you get back for every $1 spent? That means, if possible, you should model the actual cost of your campaigns, not the reach, impressions, followers, or other easy-to-game metrics.

You may also want to control for impactful external variables, like in this case your Amazon Ranking or PR, though you should be careful how you incorporate them.

Step 2: build model

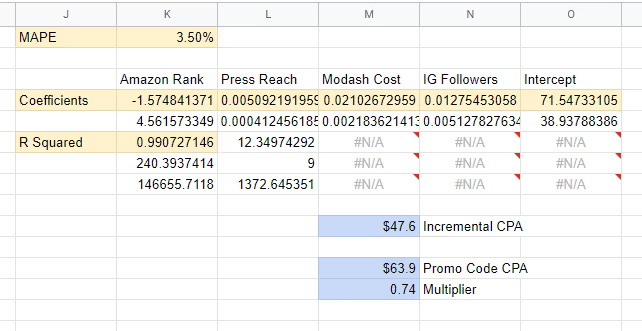

This inscrutable pile of numbers is the result of the LINEST function, available in both Excel and GSheets. The top row contains the coefficients (note: they come out in the reverse order of your input columns, with the intercept last), which tell you the impact of each marketing activity (presuming the model is correct).
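
For readers who want to see what LINEST is doing under the hood, here is a minimal sketch of the same ordinary least squares fit in plain Python. The `ols` helper and the weekly numbers are assumptions made for illustration (the data is constructed, not taken from the template), and in practice a library routine such as `numpy.linalg.lstsq` would be the more usual choice.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    n, k = len(X), len(X[0])
    # Build the augmented matrix [X'X | X'y].
    A = [
        [sum(X[r][i] * X[r][j] for r in range(n)) for j in range(k)]
        + [sum(X[r][i] * y[r] for r in range(n))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Hypothetical weekly data: spend per channel in dollars, and unit sales.
influencer_spend = [0, 5000, 0, 8000, 2000, 0]
meta_spend = [10000, 12000, 9000, 11000, 15000, 8000]
sales = [600, 800, 550, 810, 890, 500]

# One row per week, one column per channel, plus a constant column for baseline sales.
X = [[inf, meta, 1.0] for inf, meta in zip(influencer_spend, meta_spend)]
influencer_coef, meta_coef, baseline = ols(X, sales)

# LINEST lists the slopes in reverse column order, with the intercept last.
linest_order = [meta_coef, influencer_coef, baseline]
print([round(c, 4) for c in linest_order])
```

The sketch does not guard against perfectly collinear columns (for example, two channels whose spend always moves in lockstep), which would make the fit impossible to solve.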

You can interpret the coefficients as follows. For Modash Influencer Campaigns the coefficient is roughly 0.02 (rounded for display), meaning that for every $1 of budget you got about 0.02 sales. If you divide 1 by the unrounded coefficient you get the Cost Per Acquisition (CPA), which in our model is $46.60. You can compare this to the number your promo code tracking gives you, which may be higher or lower.

The multiplier is the ratio between these two numbers (the model’s CPA divided by the promo code CPA), which you can use as a rule of thumb when estimating the “true” ROI of your campaigns going forward. For example, if you run a new campaign that shows a $50 CPA by way of promo code tracking, then with a 0.73 multiplier you can be reasonably confident the “true” CPA was closer to $37 (50 × 0.73 ≈ 37).
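
The arithmetic can be laid out in a short Python sketch. The $46.60 model CPA, the 0.73 multiplier, and the $50 campaign come from the example above; the $63.84 promo code CPA is a hypothetical figure, chosen only because it produces a ratio of about 0.73.

```python
# CPA implied by the model, as quoted in the example above.
model_cpa = 46.60

# The coefficient is sales per $1 of spend, so it is the reciprocal of the CPA.
coefficient = 1 / model_cpa
print(round(coefficient, 4))  # shows as 0.02 when rounded to two decimals

# Hypothetical CPA reported by promo code tracking for the same campaigns.
tracked_cpa = 63.84

# Multiplier: how the model's CPA compares to the tracked CPA.
multiplier = round(model_cpa / tracked_cpa, 2)

# Apply the multiplier to a new campaign's tracked CPA as a rule of thumb.
new_campaign_tracked_cpa = 50.0
estimated_true_cpa = new_campaign_tracked_cpa * multiplier
print(multiplier, round(estimated_true_cpa, 2))
```

A multiplier below 1 means promo codes are under-counting sales (the true CPA is lower than tracked); above 1 means they are over-crediting the channel.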

Step 3: validate model

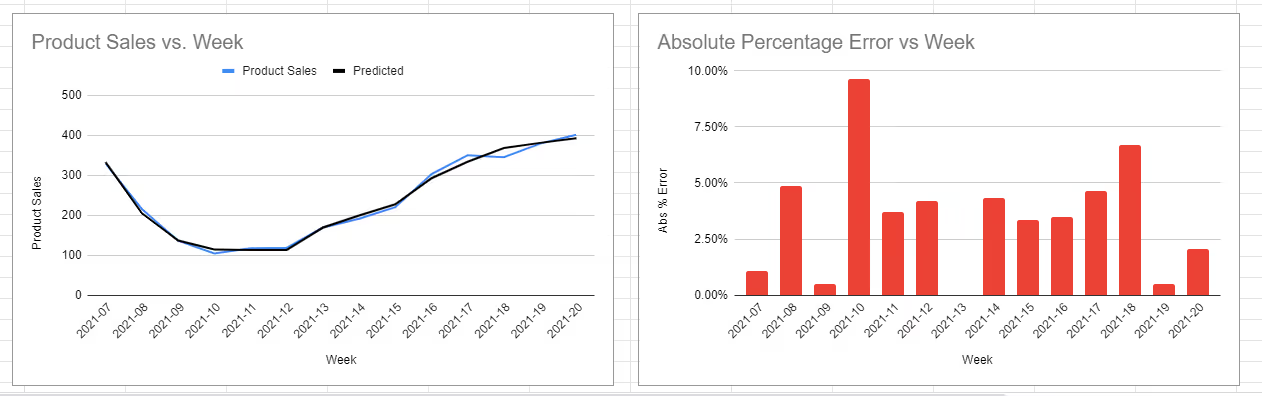

In our case we can see that the model very cleanly predicts past sales. The MAPE – a measure of accuracy/error – is usually below 5% in any given week, and sitting at 3.5% overall. This is a good start but it’s not sufficient!
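
MAPE (mean absolute percentage error) is simple to compute yourself. A minimal sketch, using made-up actual and predicted weekly sales instead of the template's figures:

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Assumes no actual value is zero."""
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return 100 * sum(errors) / len(errors)


# Hypothetical weekly sales and the model's predictions for the same weeks.
actual_sales = [600, 800, 550, 810]
predicted_sales = [612, 776, 561, 810]

print(round(mape(actual_sales, predicted_sales), 2))
```

Because each error is divided by actual sales, weeks with very low sales can dominate the average, so it is worth looking at the per-week errors as well as the overall figure.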

Next up we should check that our model can accurately predict new data it hasn’t seen yet, for example the next 4 weeks’ worth of sales.
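
One way to run that check is a holdout test: fit on the earlier weeks, then score predictions on the final 4 weeks the model never saw. The sketch below is deliberately simplified to a single channel with made-up numbers, and `fit_line` is a hypothetical helper; a real model would include every channel.

```python
from statistics import mean


def fit_line(x, y):
    """Single-variable least squares: returns (slope, intercept)."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return slope, my - slope * mx


# Hypothetical 12 weeks of influencer spend ($) and unit sales.
spend = [1000, 3000, 0, 5000, 2000, 4000, 1000, 6000, 3000, 0, 5000, 2000]
sales = [525, 552, 503, 596, 546, 578, 515, 627, 564, 494, 602, 537]

# Hold out the last 4 weeks; fit only on the weeks before them.
holdout = 4
train_spend, test_spend = spend[:-holdout], spend[-holdout:]
train_sales, test_sales = sales[:-holdout], sales[-holdout:]

slope, intercept = fit_line(train_spend, train_sales)
predictions = [intercept + slope * s for s in test_spend]

# Score the unseen weeks with MAPE (in percent).
holdout_mape = 100 * mean(abs(a - p) / a for a, p in zip(test_sales, predictions))
print(round(holdout_mape, 2))
```

If the holdout error is much worse than the error on the weeks used for fitting, the model is probably overfitting past data.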

It’s also important that the model is plausible: if it had told us our influencers were driving sales at $5 not $50, that would be hard to believe, and a sign there’s something wrong with the model. In any event these models should be validated and calibrated against what you’ve learned from other marketing attribution methods, including and especially randomized controlled experiments (A/B tests).

As you push for higher accuracy and statistical validity, you’ll want to adopt more advanced techniques like Bayesian MMM, and to measure the lagged impact of your influencer campaigns (how long after the post do sales still occur?) and their diminishing returns (how much can you spend before the channel gets saturated?), among other important features of a good Marketing Mix Model. This is where you need to start learning advanced MMM techniques, getting a data scientist on hand, or hiring a vendor like Recast that can handle this complexity and make sure your model is accurate.
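
To give a flavor of what lagged impact and diminishing returns look like in practice, here is a minimal sketch of two transformations commonly applied to spend before fitting: a geometric carryover (often called adstock) and a simple saturation curve. These are illustrative assumptions, not the method used in this article's template or by any particular vendor, and the `decay` and `half_saturation` values are hypothetical.

```python
def adstock(spend, decay):
    """Geometric carryover: each period keeps a fraction `decay` of the previous effect."""
    carried, out = 0.0, []
    for s in spend:
        carried = s + decay * carried
        out.append(carried)
    return out


def saturate(x, half_saturation):
    """Diminishing returns: response climbs toward 1, reaching 0.5 at `half_saturation`."""
    return x / (x + half_saturation)


# Hypothetical example: a single $100 post whose effect fades by half each week.
print(adstock([100, 0, 0], decay=0.5))

# Doubling spend from $1,000 to $2,000 yields less than double the response.
print(saturate(1000, half_saturation=1000), saturate(2000, half_saturation=1000))
```

The transformed spend series would replace raw spend in the regression, with the decay and saturation parameters themselves estimated or tuned, which is part of why the advanced versions need more statistical care.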
